The AI Act is Coming – Are YOU Ready?

The AI Act is LIVE in 2026

The days of ‘move fast and break things’ are officially over for AI development in the EU. Most of the AI Act’s provisions apply from August 2, 2026, which means we all have to start auditing and assessing the AI-powered efficiency tools we’ve come to love.

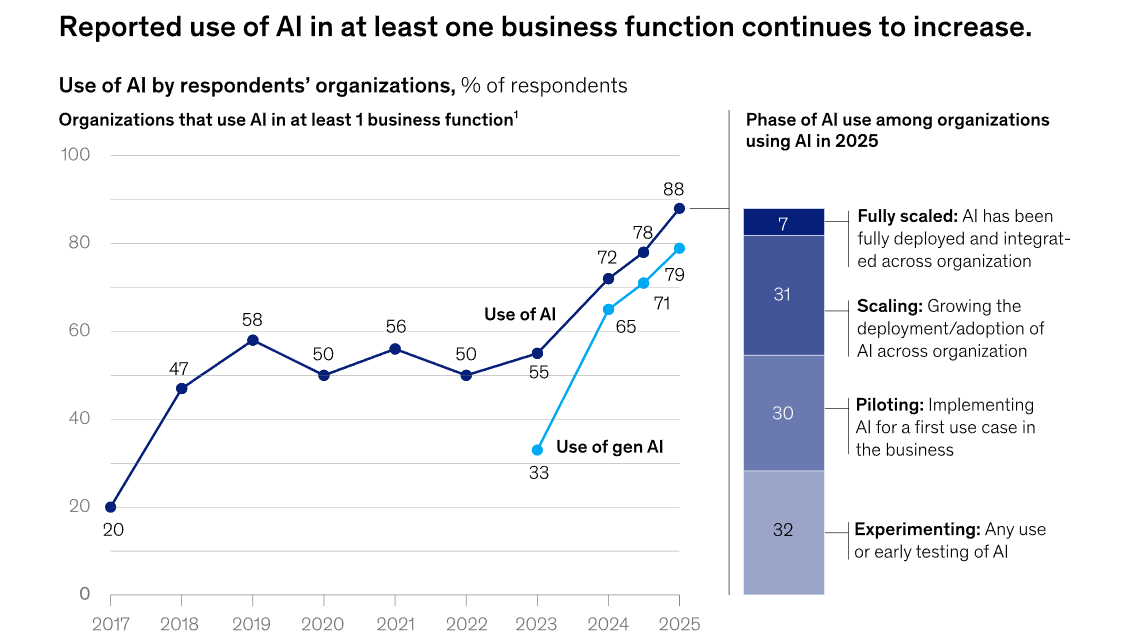

And those tools are already everywhere: 88% of organizations use AI in at least one business function, with generative AI use reaching as high as 79%.

Source: McKinsey & Company

If your business deploys an AI system in its day-to-day operations within the EU, or your AI system’s output is used in the EU (for example, when it affects EU-based individuals), then you’ll have to comply with the AI Act. But that doesn’t mean giving up on all third-party AI models within your environment.

Instead, protect yourself and your data by future-proofing your AI models. Let’s get into it.

The Risk-Based Data Challenge

One of the most notable features of the AI Act is its 4-level risk framework.

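The first two, Minimal Risk and Limited Risk, cover lower-risk AI systems. Minimal-risk systems (e.g., spam filters) face no new obligations, while limited-risk systems (e.g., chatbots) carry transparency requirements, such as telling users they’re interacting with AI or labeling content as ‘AI-generated.’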

There’s also Unacceptable Risk (e.g., AI systems used for “social scoring” or that otherwise threaten people’s fundamental rights). These practices are banned outright, but such cases are rarer. So, let’s focus on the most relevant level: High Risk.

High Risk: High-risk AI systems, such as AI-based medical software or AI systems used for recruitment, must comply with strict requirements, including risk-mitigation systems, high-quality datasets, clear information for users, and human oversight.

High-Risk AI Systems

‘High-Risk’ AI systems are interesting because the AI Act still allows you to use them, but only if they meet its requirements. Under Article 10, high-risk systems that are trained on data must be developed using training, validation, and testing datasets that meet the following three quality criteria:

- Relevance and Representativeness: Training, validation, and testing datasets must be relevant and sufficiently representative of the context and the individual(s) in relation to whom the system is intended to be used.

- Completeness and Accuracy: To the greatest extent possible, datasets must be free of errors and complete in view of the system’s intended purpose.

- Statistical Properties: Where applicable, the data must have the appropriate statistical properties to ensure it reflects the specific geographical, contextual, behavioral, or functional setting where the system will operate.
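To make these criteria more concrete, here is a minimal Python sketch of two automated checks a data team might run: a completeness check (the share of missing values per field) and a representativeness check (comparing each group’s share of the data against a reference distribution). The function names, the 5% tolerance, and the reference shares are illustrative assumptions; the AI Act itself does not prescribe these metrics or thresholds.

```python
from collections import Counter
from typing import Any

# A single training example, represented as a field -> value mapping.
Record = dict[str, Any]


def missing_value_rate(records: list[Record], fields: list[str]) -> dict[str, float]:
    """Completeness: share of records where each required field is missing or empty."""
    total = len(records)
    rates = {}
    for name in fields:
        missing = sum(1 for r in records if r.get(name) in (None, ""))
        rates[name] = missing / total if total else 0.0
    return rates


def representation_gaps(
    records: list[Record],
    attribute: str,
    reference_shares: dict[str, float],
    tolerance: float = 0.05,  # hypothetical threshold, not taken from the AI Act
) -> dict[str, tuple[float, float]]:
    """Representativeness: groups whose observed share deviates from the
    assumed reference share by more than `tolerance`.
    Returns {group: (observed_share, expected_share)}."""
    counts = Counter(r.get(attribute) for r in records)
    total = sum(counts.values())
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total if total else 0.0
        if abs(observed - expected) > tolerance:
            gaps[group] = (round(observed, 3), expected)
    return gaps


if __name__ == "__main__":
    # Hypothetical toy dataset.
    records = [
        {"region": "north", "score": 0.7},
        {"region": "north", "score": None},
        {"region": "south", "score": 0.4},
        {"region": "north", "score": 0.9},
    ]
    print(missing_value_rate(records, ["score", "region"]))
    # {'score': 0.25, 'region': 0.0}
    print(representation_gaps(records, "region", {"north": 0.5, "south": 0.5}))
    # {'north': (0.75, 0.5), 'south': (0.25, 0.5)}
```

Checks like these won’t prove compliance on their own, but running them on every dataset version gives you a repeatable, documented way to show you looked for errors, gaps, and skewed representation.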

The most important takeaway here is that the AI Act mandates a shift from simply complying with data protection law (e.g., GDPR) to actively managing the quality, integrity, and ethical characteristics of the data that underpins high-risk AI.

The goal is full traceability and accountability, from raw data all the way to final model deployment.

High-Risk but Compliant

The AI Act also sets out what it calls Data Governance and Management Practices. In practice, these translate into six actionable steps that let you keep deploying your potentially high-risk AI system, but now in a demonstrably compliant way. Check them out below:

- Document the entire data collection process, incl. the data origin & its original purpose of collection.

- Document all ‘data-prep’ operations (annotation, labeling, updating, etc.).

- Systematically examine your datasets for possible biases.

- Deploy relevant measures to detect, prevent, and mitigate any possible biases.

- Form clear assumptions about the info the data is meant to measure & represent (incl. an assessment of the availability, quantity, and suitability of the needed datasets).

- Locate any data gaps or shortcomings that prevent compliance & document how such gaps are being addressed.
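One way to keep these six steps auditable is to store them as a structured record that travels with each dataset. The sketch below is a hypothetical Python data model, not a format defined by the AI Act or by PII Tools: the class names, field names, and the recruitment example values are assumptions, and a real documentation process would likely capture more detail.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class PrepOperation:
    step: str          # e.g. "annotation", "labeling", "updating"
    description: str
    performed_on: str  # ISO date string, e.g. "2025-01-15"


@dataclass
class DataGap:
    description: str
    remediation: str = ""  # stays empty until a fix is documented


@dataclass
class DatasetRecord:
    """Hypothetical documentation record mirroring the six governance steps."""
    name: str
    origin: str            # where the data came from
    original_purpose: str  # why it was originally collected
    assumptions: list[str] = field(default_factory=list)
    prep_operations: list[PrepOperation] = field(default_factory=list)
    bias_findings: list[str] = field(default_factory=list)
    bias_mitigations: list[str] = field(default_factory=list)
    gaps: list[DataGap] = field(default_factory=list)

    def open_gaps(self) -> list[DataGap]:
        """Gaps that were identified but have no documented remediation yet."""
        return [g for g in self.gaps if not g.remediation]

    def to_json(self) -> str:
        """Serialize the record (including nested entries) for versioning."""
        return json.dumps(asdict(self), indent=2)


if __name__ == "__main__":
    record = DatasetRecord(
        name="applicants-2024",
        origin="internal applicant tracking system export",
        original_purpose="processing job applications",
        assumptions=["'years_experience' measures relevant work experience"],
    )
    record.prep_operations.append(
        PrepOperation("labeling", "Labeled hire/no-hire outcomes", "2025-01-15")
    )
    record.gaps.append(DataGap("Few applicants over 55 in the data"))
    print(len(record.open_gaps()))  # 1
    print(record.to_json())
```

Keeping the record as plain, serializable data means it can be versioned next to the dataset itself, so the documentation trail stays in sync with each change.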

Your Future-Proof AI System

Armed with the information above, you can assess and modify your own AI system to ensure it’s compliant with the AI Act. But since the enforcement date is rapidly approaching, here are a few other tools to help you achieve compliance.

First up is a free AI Act Compliance Checker, a questionnaire you can fill out to perform your own assessment.

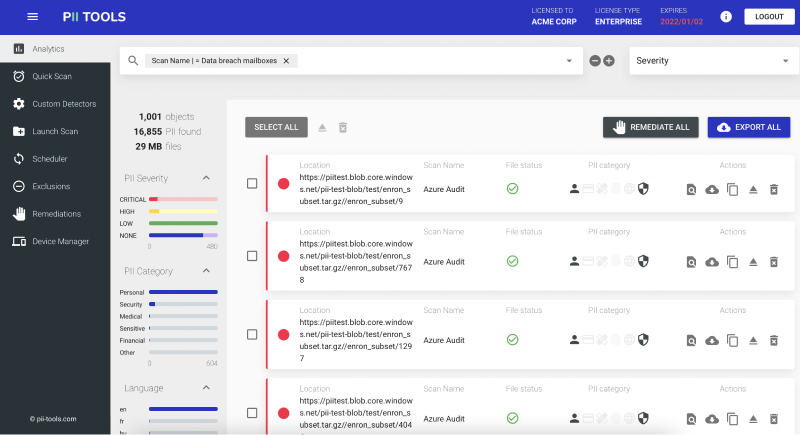

Next, we have the AI Data Protector by PII Tools. The AI Data Protector scans all your datasets BEFORE any third-party AI model can get its hands on them. It discovers and helps you remediate all at-risk data, completely neutralizing potentially risky information you wouldn’t want to feed to an outside AI tool.

Whatever method you choose, you can rest easier at night knowing you’re on track to comply with the EU AI Act before its obligations take effect. And that’s both good for you and great for your data subjects!

Is YOUR Data Ready for the AI Act? Find Out with a FREE PII Tools Demo!