The EU Artificial Intelligence Act will go into effect soon, and we can expect many similar regulations to follow. Here are the TOP 3 ways to still get the most out of helpful AI tools while avoiding fines and achieving compliance.

EU AI Act

Although technology-related regulations tend to lag behind the actual technology itself, institutions are quickly adapting to the rapid rise of AI. The most notable example of this is taking place in the European Union, which is the first government entity in the world to release a regulation on how businesses and organizations can safely use AI.

The EU AI Act is “a European regulation on artificial intelligence (AI)”, most recognizable by its three major risk classifications. It provides a framework that organizations of every size, from small start-ups to giant corporations, can use to “foster responsible artificial intelligence development and deployment in the EU”.

Now that we know what the EU AI Act is, let’s get into how it helps organizations avoid AI-related risk. (Or if you’re looking for a more detailed breakdown of the AI Act, then you’ll find everything you need to know here.)

#1. Categorizing Risk for AI Systems

The first step to avoiding risk in the age of AI regulation is to categorize the AI tools and programs you use at work depending on their risk level. The EU AI Act splits AI systems into three main risk categories:

- Unacceptable Risk: Banned outright. This covers any systems and applications that unduly restrict people’s freedom of choice, manipulate them, or discriminate against them. Examples include AI models used for:

a. Government-run social scoring based on social behavior or personal traits;

b. Predictive policing;

c. Data scraping for facial recognition databases.

- High-Risk: Subject to strict obligations that must be met before a system can be placed on the market or deployed. Examples include AI models used for:

a. Employment, workers management, and access to self-employment, e.g., an AI model that scans CVs to rank job applicants;

b. Educational and vocational training, e.g., AI tools used to grade exams;

c. Law enforcement, e.g., AI systems used to evaluate the reliability of evidence.

- Limited-Risk: AI applications that are neither banned as Unacceptable nor listed as High-Risk are largely left unregulated but are still subject to a number of ‘transparency obligations’. Examples include AI models used for:

a. Communication with people, e.g., Chatbots;

b. Text generation;

c. Image generation.
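
As a rough illustration, the three categories above can be pictured as a simple lookup that you run over your own inventory of AI tools. The Python sketch below is an assumption-laden example, not a legal classification: `RiskTier`, `EXAMPLE_USE_CASES`, `tier_for`, and `inventory_by_tier` are hypothetical names, and the table contains only the examples listed in this article.

```python
from enum import Enum
from typing import Optional


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"


# Illustrative only: the example use cases named in the list above.
EXAMPLE_USE_CASES = {
    "social scoring": RiskTier.UNACCEPTABLE,
    "predictive policing": RiskTier.UNACCEPTABLE,
    "facial recognition scraping": RiskTier.UNACCEPTABLE,
    "cv ranking": RiskTier.HIGH,
    "exam grading": RiskTier.HIGH,
    "evidence evaluation": RiskTier.HIGH,
    "chatbot": RiskTier.LIMITED,
    "text generation": RiskTier.LIMITED,
    "image generation": RiskTier.LIMITED,
}


def tier_for(use_case: str) -> Optional[RiskTier]:
    """Return the tier for a known example, or None if it needs a real assessment."""
    return EXAMPLE_USE_CASES.get(use_case.strip().lower())


def inventory_by_tier(tools: dict[str, str]) -> dict[str, list[str]]:
    """Group tool names by tier; unknown use cases land under 'unclassified'."""
    grouped: dict[str, list[str]] = {}
    for tool, use_case in tools.items():
        tier = tier_for(use_case)
        key = tier.value if tier else "unclassified"
        grouped.setdefault(key, []).append(tool)
    return grouped


if __name__ == "__main__":
    # Hypothetical tool names, purely for demonstration.
    print(inventory_by_tier({"HireBot": "CV ranking", "HelpDesk": "chatbot"}))
```

Anything that ends up under `unclassified` is a prompt to assess that tool properly rather than a sign that it is safe.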

#2. Evaluating Risk for AI Systems

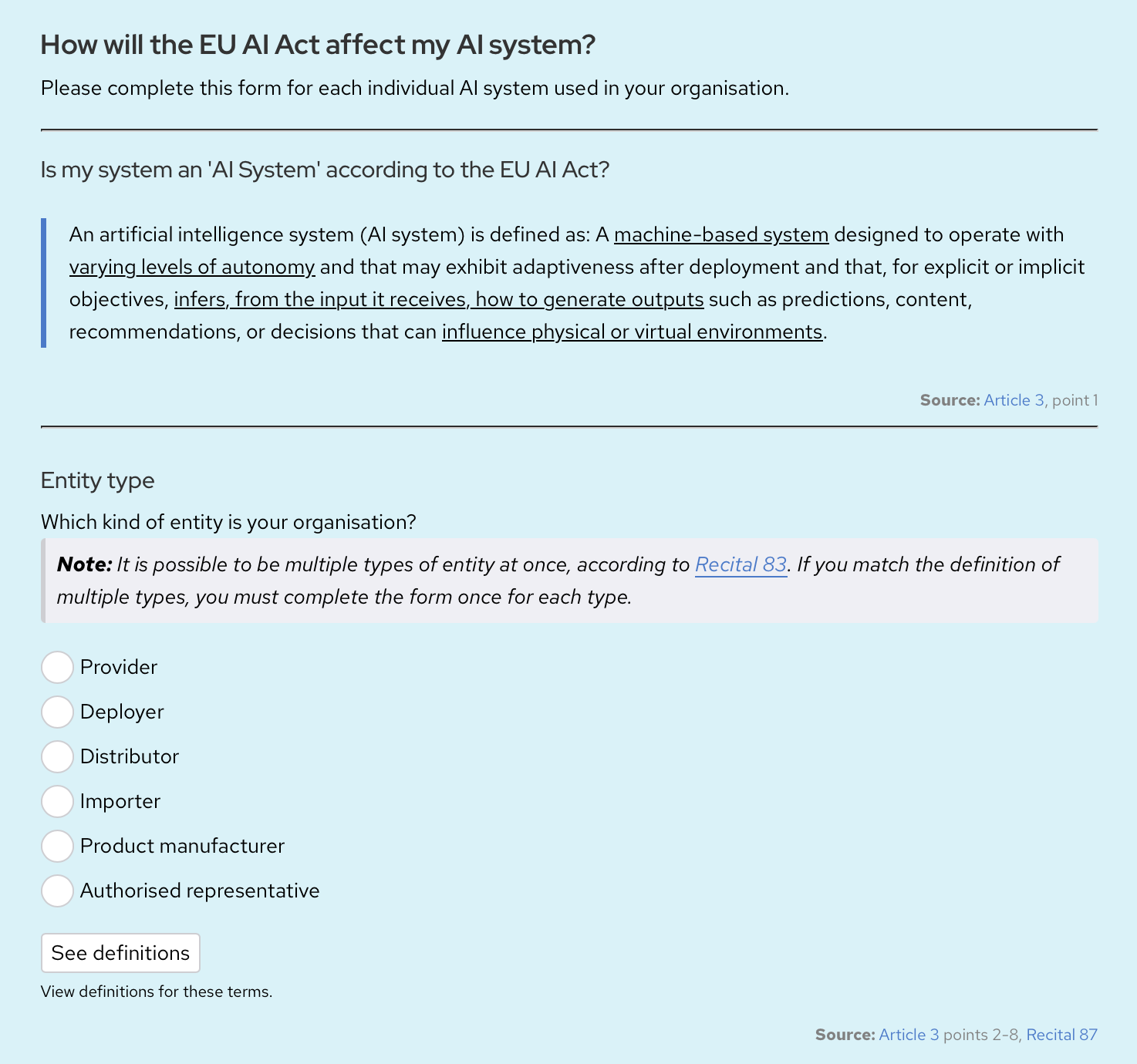

We’re aware of the EU AI Act’s risk categories, but how can you figure out which one your AI system falls into?

Luckily for us, the EU AI Act comes with its own Compliance Checker. Providers, deployers, distributors, and more can fill out this helpful form online for free and immediately see how the Act is likely to classify their AI system.

The results also come with an explanation and links to the various Articles in the AI Act that likely apply to the AI models and tools you use, based on your organization’s specific role, the sort of data you process, your location, and more.

The Compliance Checker is a quick and easy tool anyone can use to learn where they stand with the EU AI Act, if there are any changes that need to be made to achieve compliance, and how they can avoid risk while still deploying some useful AI tools at work.

Source: EU Artificial Intelligence Act

#3. General-Purpose AI Models

The third and final way to ensure AI data privacy and compliance with the EU AI Act is to be able to recognize and deploy only general-purpose AI models.

The EU AI Act defines general-purpose AI models as “displaying significant generality and being capable of competently performing a wide range of distinct tasks regardless of the way the model is placed on the market and that can be integrated into a variety of downstream systems or applications”.

In other words, when deciding to deploy a new AI product in your business, you’ll want to use only those made by providers who demonstrate a good understanding of their models along the entire AI value chain.

General-purpose AI models allow anyone “downstream” (i.e., you, the user) to both integrate such models into their AI systems and to fulfill their own obligations under the AI Act.

AI Data Protector

Those were the TOP 3 steps you can take today to avoid risk and achieve compliance with forthcoming AI regulations. But there’s also one final way you can truly ensure your company data is safe from risky AI models.

The AI Data Protector by PII Tools is a PII detection API that automatically screens every file as it’s uploaded to your system. It then categorizes the results by risk assessment, allowing you to remediate any potentially at-risk data BEFORE feeding it to a third-party AI model.
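
To make the idea of screening data before it reaches a third-party model more concrete, here is a minimal Python sketch. It is not the AI Data Protector API. The patterns and the `screen_text`, `redact`, and `safe_to_send` helpers are hypothetical stand-ins that only catch email addresses and simple phone-like numbers, whereas a real PII detector covers far more data types and file formats.

```python
import re

# Deliberately simple, illustrative patterns (assumptions, not a full PII detector).
PII_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "phone": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def screen_text(text: str) -> dict[str, list[str]]:
    """Return every match per PII type found in the text."""
    findings: dict[str, list[str]] = {}
    for label, pattern in PII_PATTERNS.items():
        matches = pattern.findall(text)
        if matches:
            findings[label] = matches
    return findings


def redact(text: str) -> str:
    """Replace each match with a placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label.upper()}]", text)
    return text


def safe_to_send(text: str) -> bool:
    """True only when no pattern matched, i.e. nothing was flagged."""
    return not screen_text(text)


if __name__ == "__main__":
    sample = "Contact Jana at jana@example.com or +420 123 456 789."
    if not safe_to_send(sample):
        # Remediate before the text goes anywhere near an external model.
        print(redact(sample))
```

The design point is the order of operations: detection and remediation happen first, and only the cleaned text is passed on to the AI model.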

Do what’s best for your data privacy and your organization by applying the three steps described here, and then take your EU AI Act compliance goals a step further by deploying the AI Data Protector. That way, you can minimize your risk while still getting the most out of any helpful AI tools!

Only Feed Clean & Safe Data to AI Models – Now Possible with the AI Data Protector!